Bug bounty programs have become increasingly popular in recent years, with companies offering rewards to hackers and security researchers who find vulnerabilities in their systems. While many tools are available to help with bug hunting, writing your own scripts can be a great way to automate and streamline the process. In this article, we will walk through the steps to set up a bug bounty script with Python and Bash that monitors a target's list of subdomains for changes, so that you can check newly added subdomains for potential vulnerabilities.

Prerequisites

Some prerequisites to setting up your bug bounty script with Python and Bash include:

- Installing Python 3 on your machine: You can visit the official Python website to download and install the latest version of Python.

- Installing the Requests and Beautiful Soup libraries: You can use the pip package manager that comes with Python to install these libraries. Simply run the following command: pip install requests beautifulsoup4

- Familiarity with Python and Bash: It is helpful to have a basic understanding of programming concepts and how to write scripts in Python and Bash.

- Access to a website or system to monitor: In order to test and use your script, you will need access to a website or system to monitor for vulnerabilities. Be sure to get permission before attempting to access any systems or websites.

- Knowledge of ethical hacking guidelines: Always follow ethical hacking guidelines and make sure to get permission before attempting to access any systems or websites.
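One way to make the permission requirement concrete is to keep the program's scope inside the script and refuse to touch anything outside it. The sketch below is only an illustration: the IN_SCOPE list and the is_in_scope helper are hypothetical names, and the entries are placeholders that you would replace with the scope published by the program you are working on. The wildcard matching relies on Python's standard fnmatch module.

```python
from fnmatch import fnmatch
from urllib.parse import urlparse

# Hypothetical scope list; copy the real entries from the program's policy page.
IN_SCOPE = ["example.com", "*.example.com"]


def is_in_scope(url, scope=IN_SCOPE):
    """Return True if the URL's hostname matches an entry in the scope list."""
    host = urlparse(url).hostname  # None when the URL has no host part
    if host is None:
        return False
    host = host.lower()
    # fnmatch treats "*" as a wildcard, so "*.example.com" covers subdomains.
    return any(fnmatch(host, pattern.lower()) for pattern in scope)
```

Calling is_in_scope before every request gives the script a single place where out-of-scope targets are rejected.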

Step 1: Setting up your environment

Before you can start writing your script, you need to have the necessary tools and libraries installed on your machine. This includes Python, Bash, and any additional libraries or modules that you will be using. For this tutorial, we will be using Python 3 and the Requests and Beautiful Soup libraries.
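A quick way to confirm that the environment is ready is to ask Python whether the libraries can be found. This is a minimal sketch using only the standard library; the missing_modules helper is a name made up for this example, and bs4 is the import name of the beautifulsoup4 package.

```python
import importlib.util
import sys


def missing_modules(names):
    """Return the module names from `names` that Python cannot locate."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    print("Python version:", sys.version.split()[0])
    # 'bs4' is the import name of the beautifulsoup4 package.
    for name in missing_modules(["requests", "bs4"]):
        print("Missing module:", name)
```

If the script prints no "Missing module" lines, both libraries are installed for the interpreter you ran it with.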

To install Python 3, you can visit the official website and download the latest version for your operating system. If you are using a Unix-based system such as Linux or macOS, Python may already be installed. You can check the version by running the following command:

    python3 --version

To install the Requests and Beautiful Soup libraries, you can use the pip package manager that comes with Python. Simply run the following command:

    pip install requests beautifulsoup4

Step 2: Setting up your script

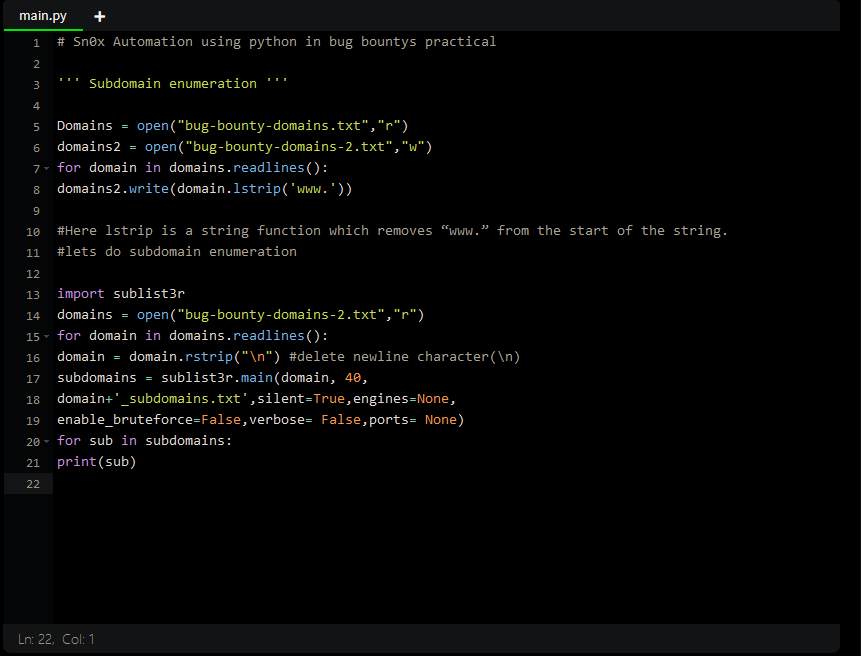

Now that you have your environment set up, it’s time to start writing your script. First, you need to import the necessary libraries and modules into your script. For this tutorial, we will be using the Requests library to make HTTP requests and the Beautiful Soup library to parse the HTML of the response.

    import requests
    from bs4 import BeautifulSoup

Next, you need to define a function to make an HTTP request to a given URL and return the response. This can be done using the Requests library’s get() function.

    def get_response(url):
        # A timeout keeps the script from hanging if the server never answers.
        response = requests.get(url, timeout=10)
        return response

Step 3: Parsing the HTML

Now that you have a way to make HTTP requests, you need to parse the HTML of the response to extract any useful information. For this tutorial, we will be using the Beautiful Soup library to parse the HTML and extract the list of subdomains.

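To do this, you can define a function that takes the HTML of the response as input and returns a list of subdomains. In this example, the list is simply every absolute link (an href starting with http) found in an a tag on the page. Here’s an example of how you might do this: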

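    def parse_html(html):
        soup = BeautifulSoup(html, 'html.parser')
        subdomains = []
        # href=True skips <a> tags without an href, which would otherwise
        # return None and make startswith() raise an AttributeError.
        for link in soup.find_all('a', href=True):
            subdomain = link.get('href')
            # Keep absolute links only (http:// or https://).
            if subdomain.startswith('http'):
                subdomains.append(subdomain)
        return subdomains

Step 4: Monitoring for changes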

Now that you have a way to make HTTP requests and parse the HTML of the response, you can start monitoring for changes in the list of subdomains. To do this, you can create a loop that makes an HTTP request to a given URL and checks the list of subdomains against a previous list. If there are any new or removed subdomains, you can alert the user.

Here’s an example of how you might do this:

    import time

    # url is the page to monitor; it is defined in the full script in Step 5.
    prev_subdomains = []
    while True:
        response = get_response(url)
        subdomains = parse_html(response.text)
        added_subdomains = list(set(subdomains) - set(prev_subdomains))
        removed_subdomains = list(set(prev_subdomains) - set(subdomains))
        if added_subdomains:
            print('New subdomains found:')
            print(added_subdomains)
        if removed_subdomains:
            print('Subdomains removed:')
            print(removed_subdomains)
        prev_subdomains = subdomains
        time.sleep(60)

Note that this example uses the time library to pause the loop for 60 seconds before making another HTTP request. This can be adjusted depending on how frequently you want to check for changes. Because prev_subdomains starts out empty, the first pass through the loop reports every subdomain as new.
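The loop above keeps the previous list only in memory, so a restart makes every subdomain look new again. One possible way around that, sketched here under the assumption that a small JSON file on disk is acceptable, is to save each snapshot and load it on the next run. The function names and the file path are hypothetical and chosen only for this example.

```python
import json
from pathlib import Path


def diff_subdomains(previous, current):
    """Return (added, removed) as sorted lists, using set difference."""
    previous_set, current_set = set(previous), set(current)
    added = sorted(current_set - previous_set)
    removed = sorted(previous_set - current_set)
    return added, removed


def load_snapshot(path):
    """Load the last saved list, or an empty list if no file exists yet."""
    file = Path(path)
    if not file.exists():
        return []
    return json.loads(file.read_text(encoding="utf-8"))


def save_snapshot(path, subdomains):
    """Write the de-duplicated, sorted list so the next run can compare to it."""
    Path(path).write_text(json.dumps(sorted(set(subdomains))), encoding="utf-8")
```

In the monitoring loop, load_snapshot would replace the empty starting list and save_snapshot would run after each comparison.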

Step 5: Putting it all together

Now that you have all the necessary functions, you can put them all together in a single script. Here’s an example of how your final script might look:

    import time

    import requests
    from bs4 import BeautifulSoup


    def get_response(url):
        # A timeout keeps the script from hanging if the server never answers.
        response = requests.get(url, timeout=10)
        return response


    def parse_html(html):
        soup = BeautifulSoup(html, 'html.parser')
        subdomains = []
        # href=True skips <a> tags that have no href attribute.
        for link in soup.find_all('a', href=True):
            subdomain = link.get('href')
            if subdomain.startswith('http'):
                subdomains.append(subdomain)
        return subdomains


    url = 'https://example.com/subdomains'
    prev_subdomains = []
    while True:
        response = get_response(url)
        subdomains = parse_html(response.text)
        added_subdomains = list(set(subdomains) - set(prev_subdomains))
        removed_subdomains = list(set(prev_subdomains) - set(subdomains))
        if added_subdomains:
            print('New subdomains found:')
            print(added_subdomains)
        if removed_subdomains:
            print('Subdomains removed:')
            print(removed_subdomains)
        prev_subdomains = subdomains
        time.sleep(60)

With this script, you can monitor a given URL for changes in its list of subdomains and get an alert whenever one is added or removed. This can be a useful tool for bug bounty hunters, as it can help identify potential vulnerabilities that may have been missed otherwise.
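The script collects full links, so two URLs on the same host count as two separate entries. If you would rather track hostnames, a helper along these lines could be applied to the list before comparing. It is a sketch, not part of the script above: extract_hostnames and its root_domain parameter are hypothetical names, and it uses only urllib.parse from the standard library.

```python
from urllib.parse import urlparse


def extract_hostnames(urls, root_domain):
    """Return the sorted, unique hostnames in `urls` that belong to `root_domain`."""
    root = root_domain.lower().strip(".")
    hostnames = set()
    for url in urls:
        host = urlparse(url).hostname  # None for relative URLs or URLs without a host
        if host is None:
            continue
        host = host.lower()
        # Accept the root domain itself and anything ending in ".<root>".
        if host == root or host.endswith("." + root):
            hostnames.add(host)
    return sorted(hostnames)
```

For example, passing the result of parse_html together with 'example.com' would reduce the links to the distinct hosts under that domain.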

FAQ

Q: Can I use this script to hack into any website or system?

A: No, hacking into systems or websites without permission is illegal and can result in serious consequences. This script is meant to be used as a tool to help identify vulnerabilities in systems or websites that you have permission to access as part of a bug bounty program.

Q: Is this script foolproof?

A: No. No script is foolproof, and it is important to use caution and good judgement when using any tools for bug hunting. It is always a good idea to thoroughly test and validate any vulnerabilities before reporting them.

Q: Can I use this script to find vulnerabilities in any system or website?

A: Not necessarily. Different systems and websites have different vulnerabilities and it is important to have a good understanding of the target system or website before attempting to find vulnerabilities.

Q: How often should I run this script?

A: The frequency at which you run this script will depend on your specific needs and the target system or website. It is a good idea to run the script regularly to ensure that you are catching any changes or updates to the subdomains, but be aware that running the script too frequently may result in unnecessary strain on the target system or website.
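To keep the load on the target low, the fixed 60-second pause could be replaced with a delay that grows when requests fail. The following is a sketch under assumed values: next_delay, the one-hour cap, and the 10% jitter are illustrative choices, not recommendations from any bug bounty program.

```python
import random


def next_delay(base_seconds, consecutive_failures, max_seconds=3600, jitter=0.1):
    """Return how many seconds to sleep before the next check.

    The delay doubles for each consecutive failed request, is capped at
    max_seconds, and is then stretched by a random factor of up to `jitter`
    so that checks do not land on exact intervals.
    """
    delay = min(base_seconds * (2 ** consecutive_failures), max_seconds)
    return delay * (1 + random.uniform(0, jitter))
```

In the monitoring loop, the result would be passed to time.sleep, with the failure counter reset to zero after each successful request.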

Conclusion

Hacking into systems or websites without permission is illegal and can result in serious consequences. Always follow ethical hacking guidelines and make sure to get permission before attempting to access any systems or websites.

Additionally, be aware that using bug bounty scripts or any other tools for the purpose of finding vulnerabilities can potentially be damaging to the target system or website. Use caution and consider the potential consequences before using these tools.

Finally, remember that bug bounty programs are designed to help companies identify and fix vulnerabilities in their systems. They are not an invitation to exploit or cause harm to a system or website. Always act responsibly and follow ethical guidelines when participating in a bug bounty program. Happy bug hunting!
